Administravia

This is the first beta description of Administravia, software for academic program analysis.

Academic administration does not have to be death by 168,241 mosquito bites...

This software is designed to aid in the planning, implementation, and monitoring of data-driven curricular change and college/school-level annual assessments. It is a component of a forthcoming text on Intentional Curriculum Design: A Data-Centric Approach for STEM Curricula and is available on macOS, iPadOS, and iOS platforms.

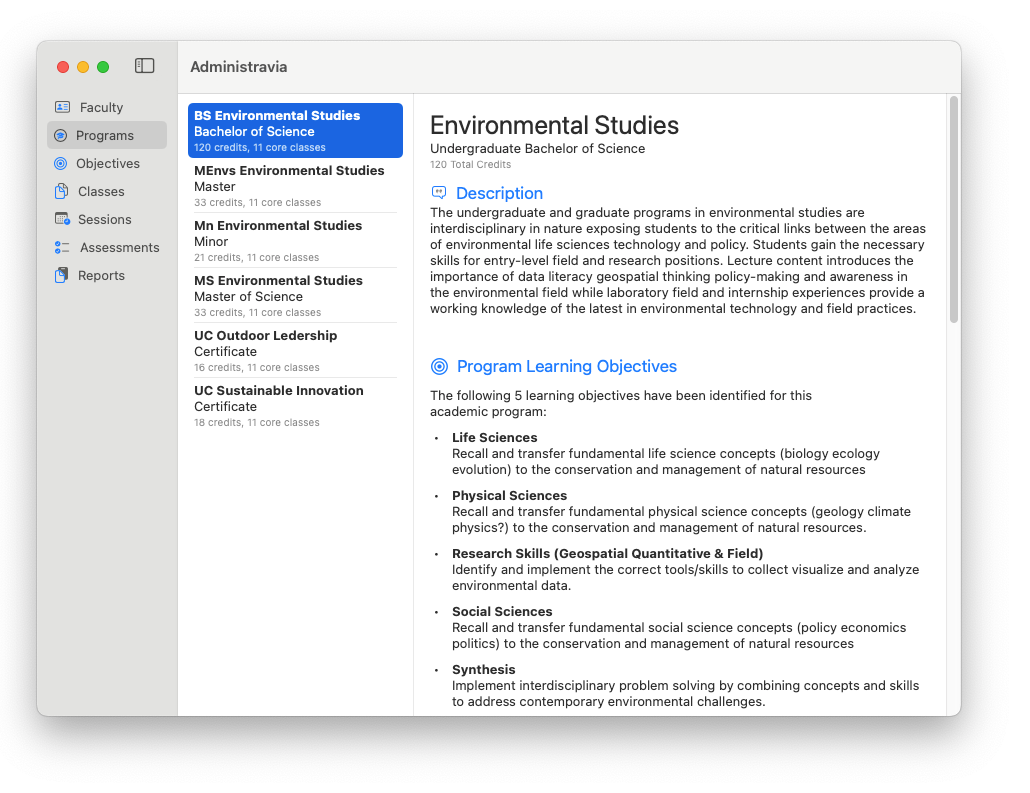

Academic Program Management

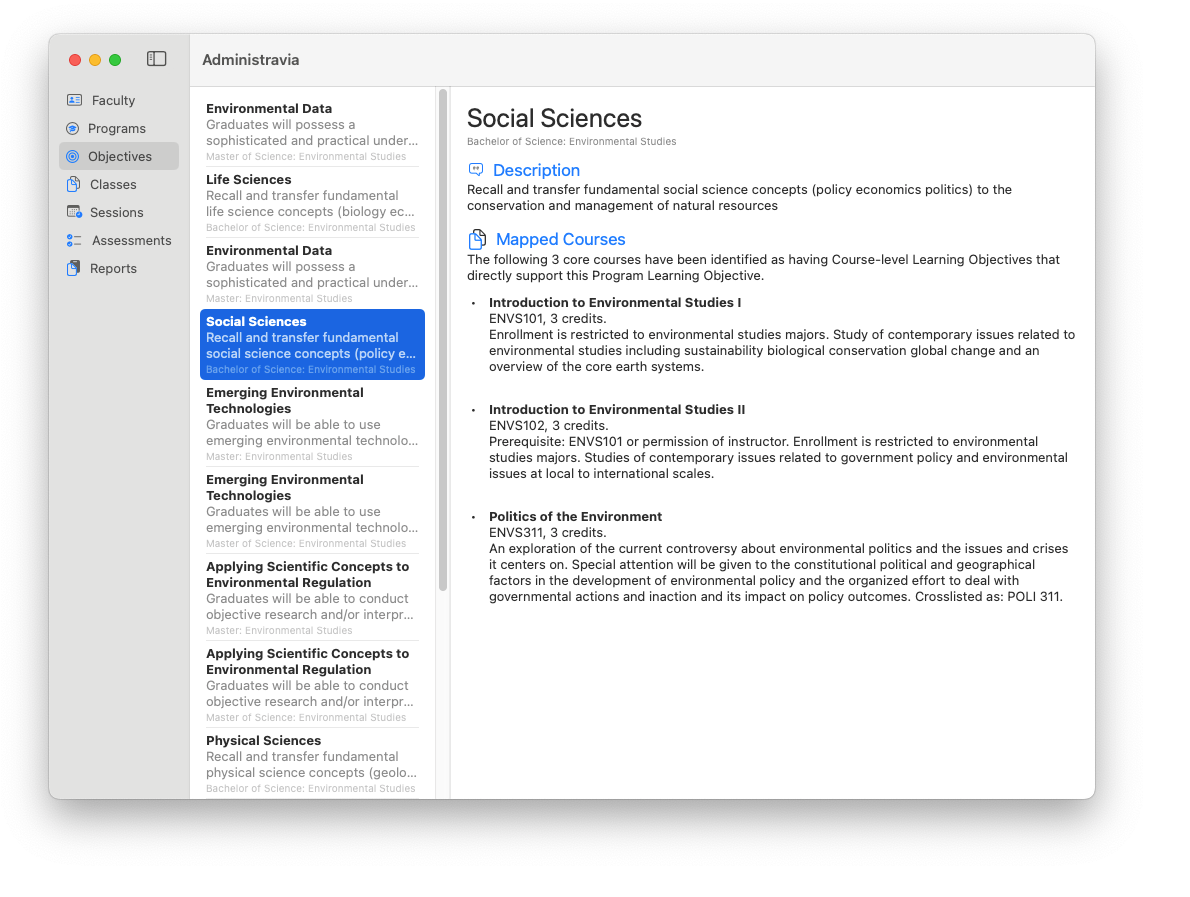

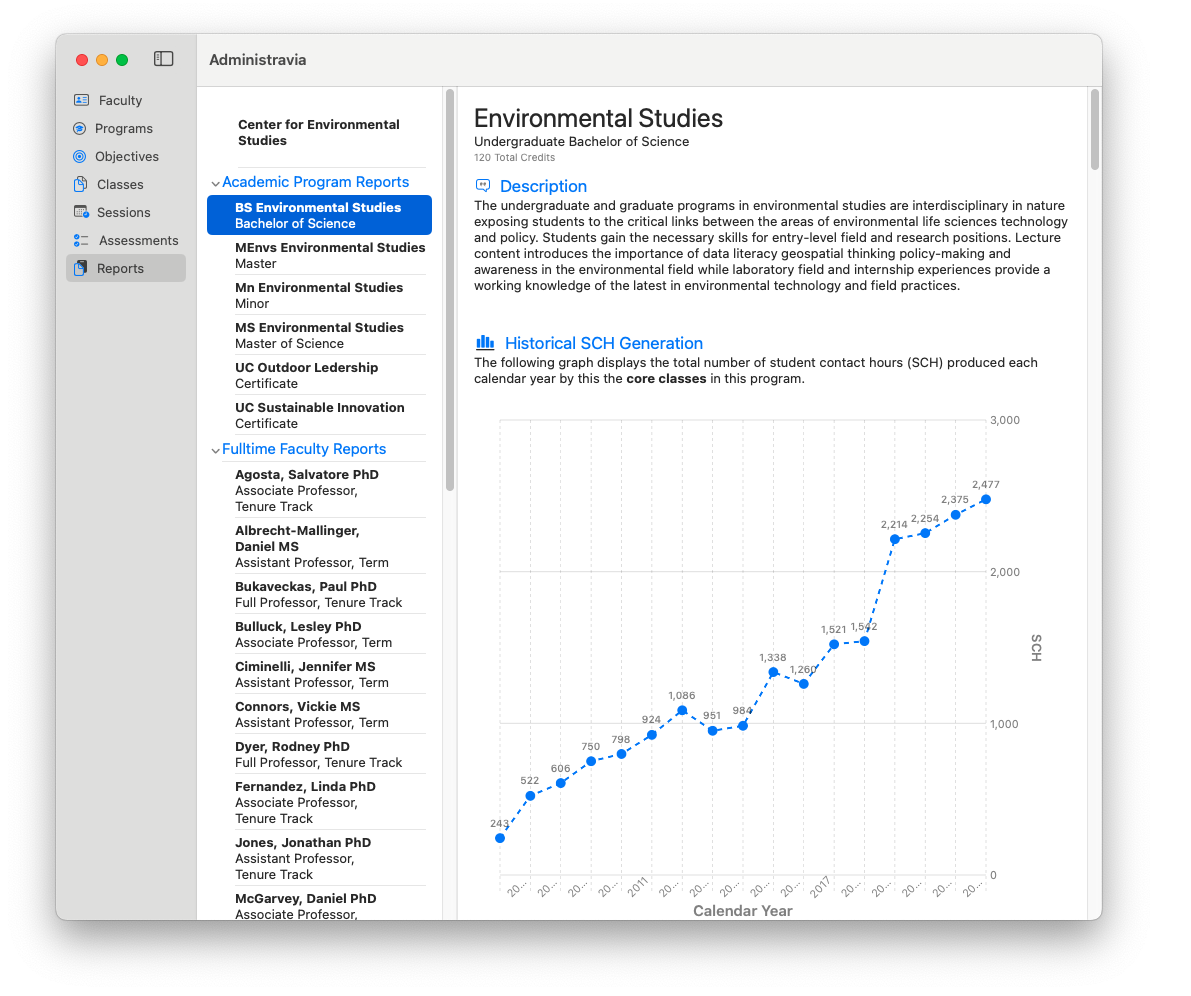

Each academic program is defined by the courses that contribute to it and how these courses are aligned with pre-defined Program Learning Objectives. In fact, every course-level learning objective needs to be mapped directly onto the Program Learning Objectives.

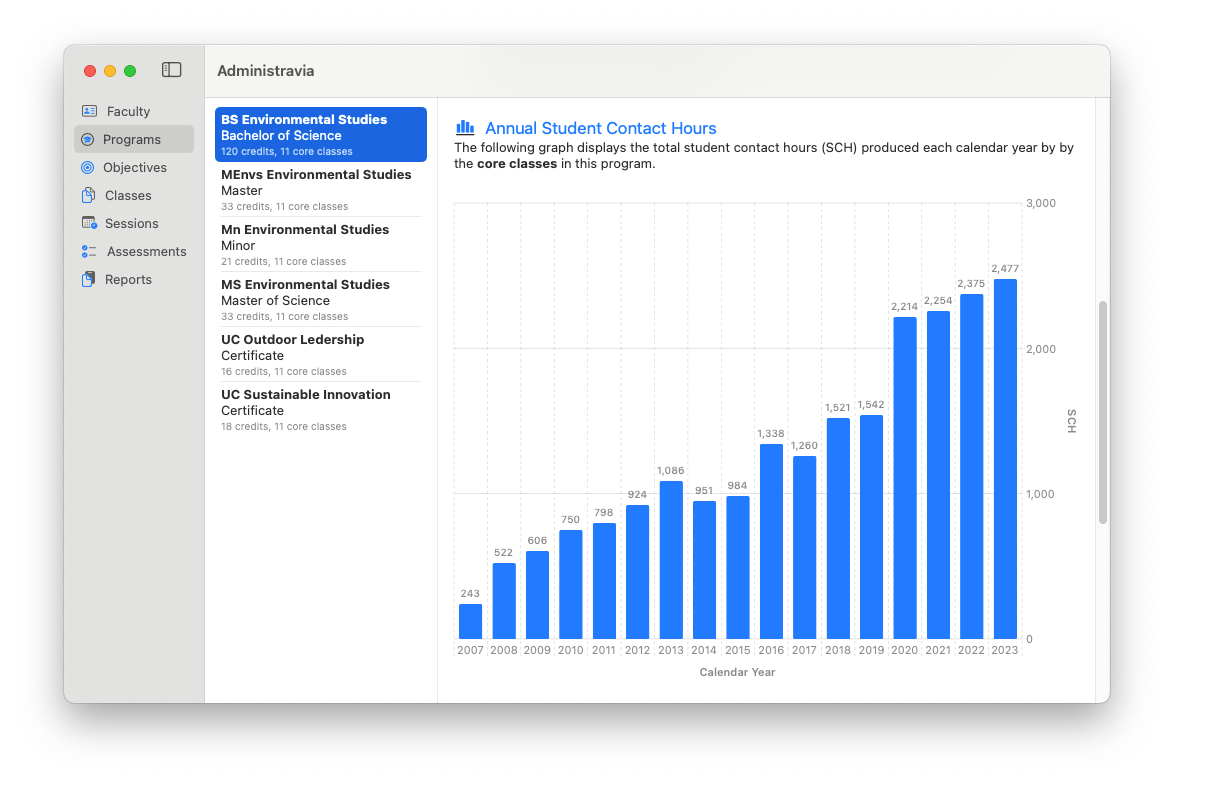

Program metrics where you need them.

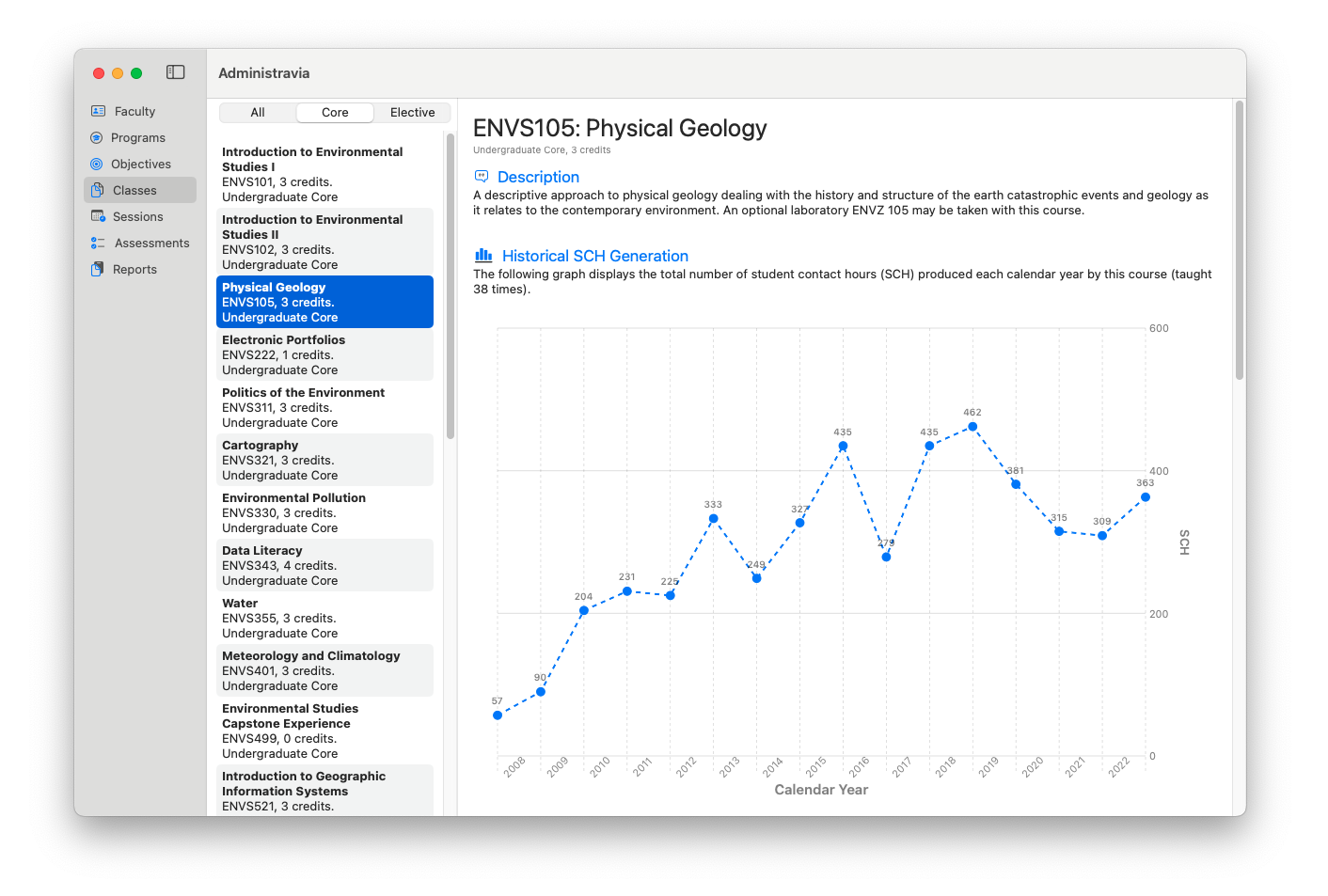

One criterion for program development success is the number of students in the program and their generation of contact hours over time. Students are drawn to dynamic programs that offer the skills, knowledge, and hands-on experiences they see as critical for their professional trajectory. They will vote with their feet, and as a consequence, program changes that generate more interest indicate success.

Program Learning Outcomes

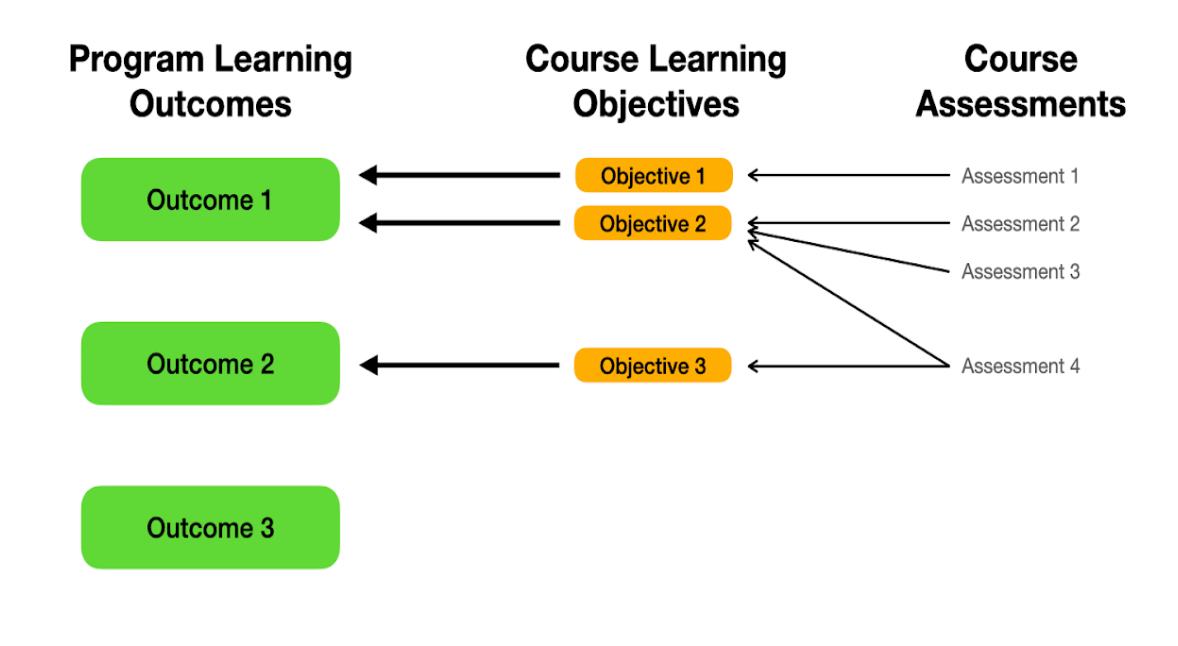

Program learning outcomes are the CORE component of any academic program. They must be defined first, and then the expected and anticipated outcomes must be set. Each learning outcome must have an unambiguous description and target a directly measurable learning component.

Each Program Learning Outcome must also have specific courses in the core curriculum and component assessment tools that can be traced through the course learning objectives as evidence of skill, knowledge, or hands-on experiences.

Incomplete or missing links within this 3-level mapping (course assessments, course learning objectives, and Program Learning Outcomes) suggest incongruence among outcomes, objectives, and expectations.

- Course assessments that do not map onto course learning objectives suggest that students are being evaluated for some content that is not the specified domain of this course. This may be evidence of busy work or incomplete coverage of what the course is intended to provide.

- Course learning objectives that do not map onto programmatic-level expected outcomes raise questions such as, “Is this course really CORE to our program?” and “Do we need to re-evaluate what our learning outcomes should really be since these courses are developing learning that we are not saying is CORE?”
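The two kinds of gap described above can be checked mechanically once the mapping is written down. The following is a minimal sketch, not Administravia's implementation: the dictionaries, the identifiers such as `CLO-1` and `PLO-A`, and the `find_gaps` function are all hypothetical and exist only to illustrate the idea.

```python
# Illustrative sketch only: the data layout and names are assumptions,
# not Administravia's actual schema.
from typing import Dict, List

# Level 1 -> 2: each course assessment lists the course learning
# objectives (CLOs) it measures.
assessment_to_objectives: Dict[str, List[str]] = {
    "Exam 1": ["CLO-1"],
    "Field report": ["CLO-2"],
    "Weekly quiz": [],  # measures no stated objective
}

# Level 2 -> 3: each course learning objective lists the Program
# Learning Outcomes (PLOs) it supports.
objective_to_outcomes: Dict[str, List[str]] = {
    "CLO-1": ["PLO-A"],
    "CLO-2": [],         # supports no program outcome
    "CLO-3": ["PLO-B"],  # never measured by any assessment
}


def find_gaps(
    a2o: Dict[str, List[str]], o2p: Dict[str, List[str]]
) -> Dict[str, List[str]]:
    """Report missing links in the assessment -> CLO -> PLO chain."""
    assessed = {clo for clos in a2o.values() for clo in clos}
    return {
        # Assessments that evaluate nothing the course claims to teach.
        "unmapped_assessments": sorted(a for a, clos in a2o.items() if not clos),
        # Objectives that do not contribute to any program outcome.
        "unmapped_objectives": sorted(o for o, plos in o2p.items() if not plos),
        # Objectives for which no evidence is ever collected.
        "unassessed_objectives": sorted(o for o in o2p if o not in assessed),
    }


gaps = find_gaps(assessment_to_objectives, objective_to_outcomes)
for kind, items in gaps.items():
    print(f"{kind}: {items}")
```

The third check, objectives that no assessment ever measures, is not one of the two bullets above but follows from the same requirement that every outcome be traceable to evidence.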

To empower faculty to develop their curricula in this intentional format, I have created Backflow.Studio, which significantly simplifies this approach to course development; its output imports into Administravia.

Course Assessments

Administravia facilitates both direct and indirect assessment techniques. Direct assessment is typically derived from some measure of either individual or aggregate student performance as determined by the instructor/professor overseeing the content delivery. Indirect assessments involve having the participant provide feedback based on their perceptions of the course, instructor, content, or other learning components. Both direct and indirect assessments are quantified each time a particular course section is offered.

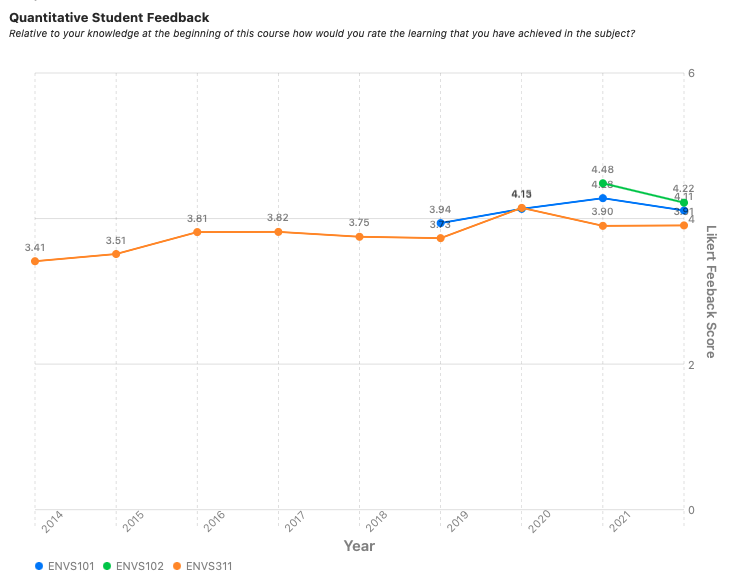

This is an example of an indirect assessment question about the perception of the topic as presented in the instructor's classroom sessions. Responses can be recorded on various scales (including Likert, Binary, Divergent, Continuous, and Categorical levels) and are assessed across academic years.

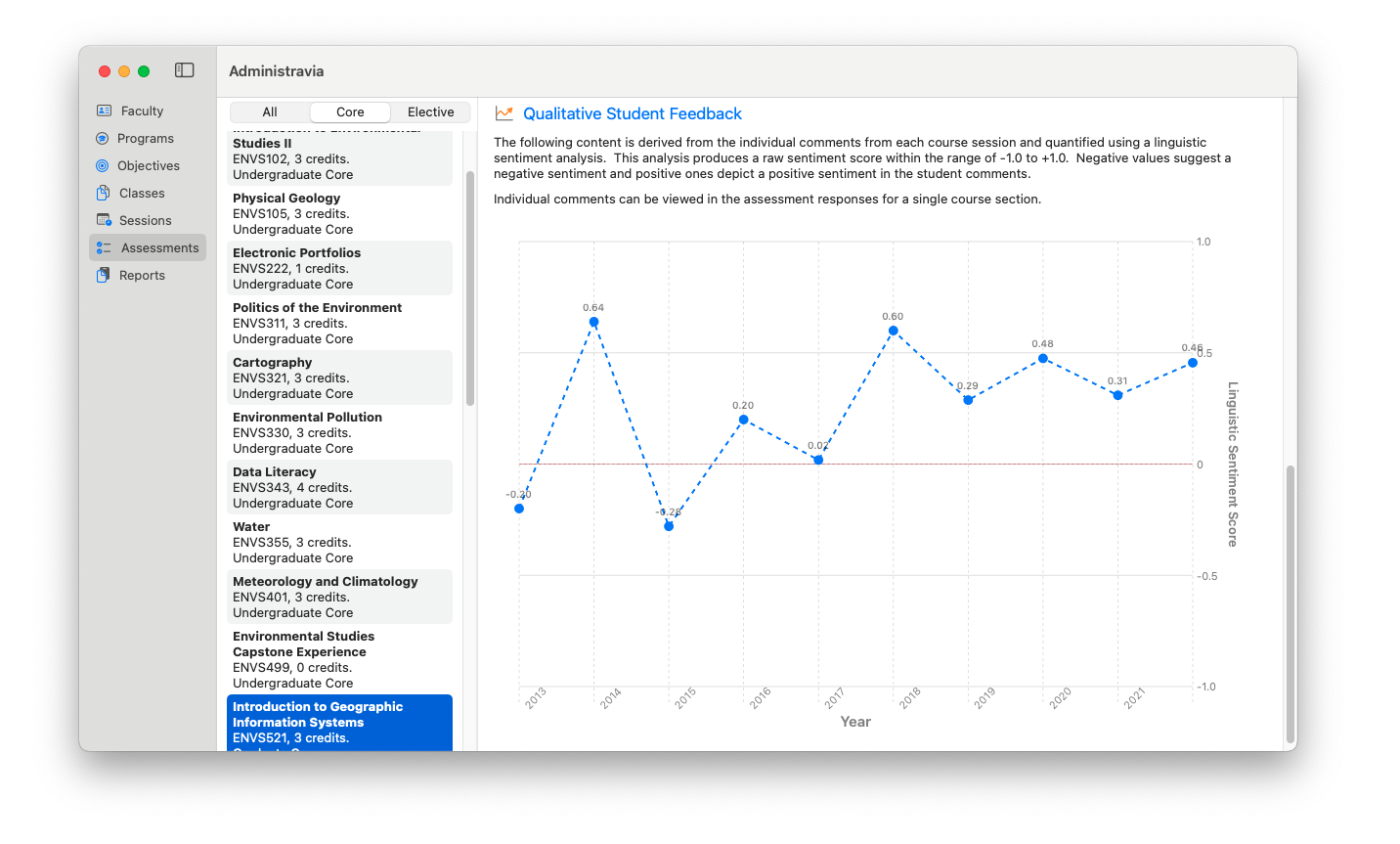

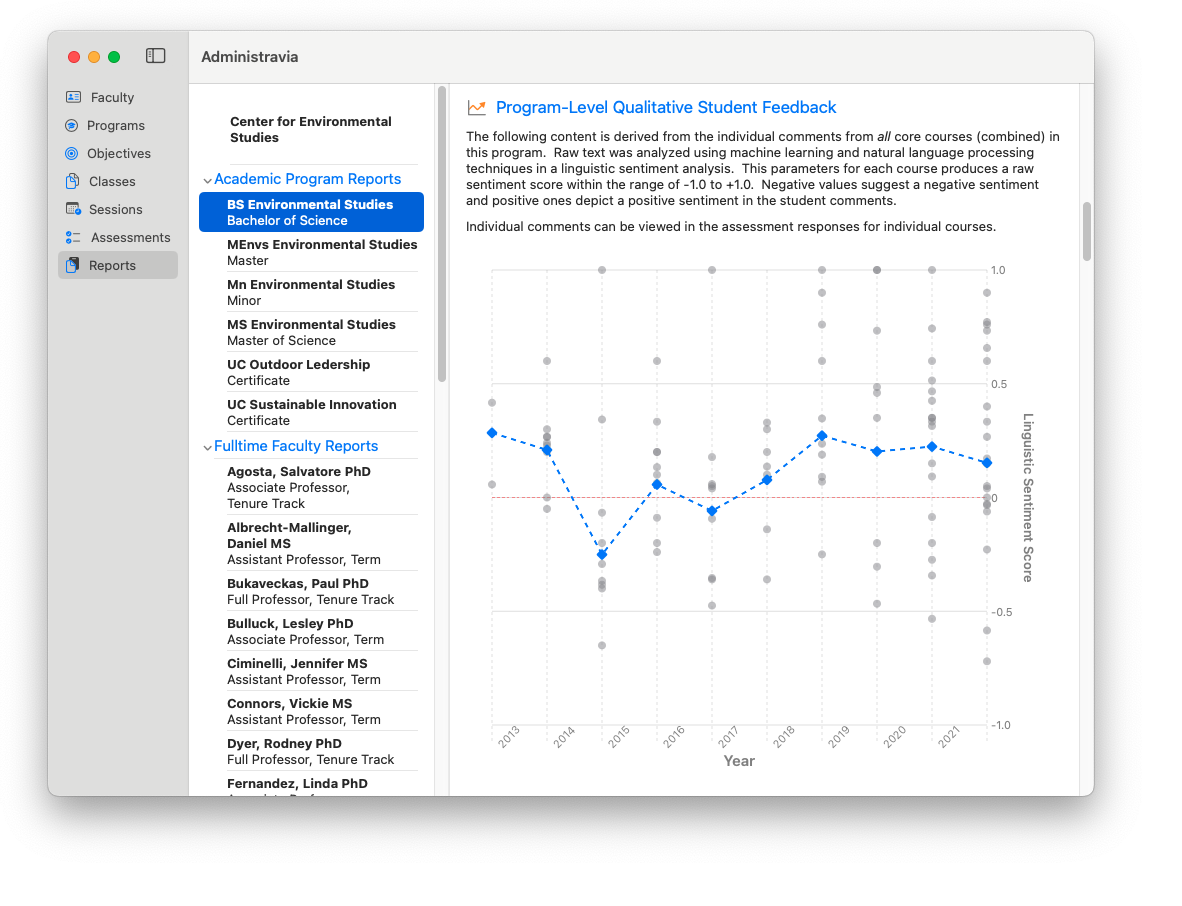

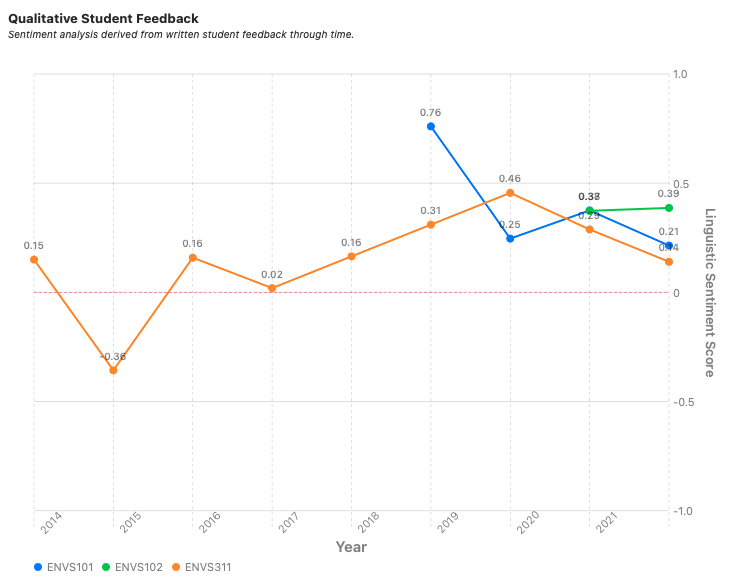

Administravia also uses machine learning and Natural Language Processing tools to derive overall course sentiment scores based on the written review content for each session. Here, each student's written free-response summary of the course is converted into a linguistic sentiment score, bounded on the range -1.0 … +1.0 (negative, neutral, positive), and then averaged across years. This captures a qualitative temporal measure of how written student feedback changes over time.
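As a rough illustration of the aggregation step only, the sketch below assumes the per-response sentiment scores have already been produced by some NLP tool and that averaging is done within each academic year. The sample data and the `yearly_sentiment` function are hypothetical; the scoring model itself is not shown.

```python
# Illustrative sketch only: scores are assumed to come from an upstream
# sentiment model; the data and function name are hypothetical.
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

# (academic_year, sentiment_score) for each written student response.
responses = [
    (2021, 0.5), (2021, -0.25), (2021, 0.5),
    (2022, 0.25), (2022, 0.75),
]


def yearly_sentiment(pairs: Iterable[Tuple[int, float]]) -> Dict[int, float]:
    """Average sentiment per year, rejecting scores outside [-1.0, +1.0]."""
    by_year = defaultdict(list)
    for year, score in pairs:
        if not -1.0 <= score <= 1.0:
            raise ValueError(f"score {score} is outside the range -1.0 to +1.0")
        by_year[year].append(score)
    # Sort by year so the result reads as a time series.
    return {year: mean(scores) for year, scores in sorted(by_year.items())}


trend = yearly_sentiment(responses)
for year, score in trend.items():
    print(year, round(score, 3))
```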

Multilevel Report Generation

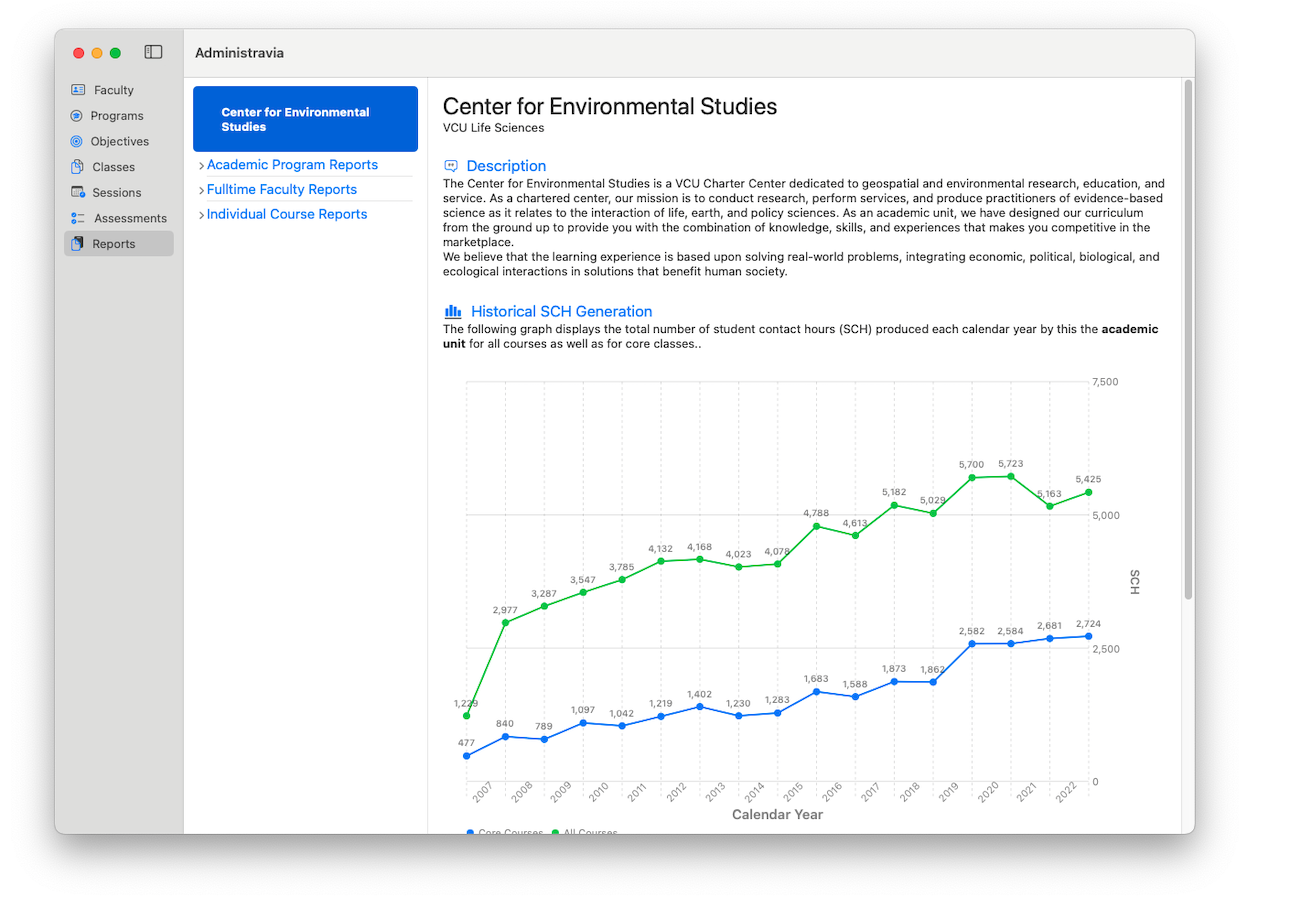

Unit-Level Metrics

Each academic unit can be evaluated for its programmatic and faculty output in each reporting cycle. Reporting information may include:

- Annual student contact hours generation for core and elective courses in all programs supported.

- Core and Elective DWF rankings and trends.
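To make the DWF ranking concrete, here is a minimal sketch. It assumes that DWF means the share of final grades that are a D, a W (withdrawal), or an F, which the text above does not spell out. The course names, the grade lists, and the `dwf_rate` function are hypothetical.

```python
# Illustrative sketch only. Assumption: "DWF" counts final grades of
# D, W (withdrawal), or F. Course names and grades are made up.
from typing import Dict, List, Tuple

DWF_GRADES = {"D", "W", "F"}


def dwf_rate(grades: List[str]) -> float:
    """Fraction of final grades in a course that are D, W, or F."""
    if not grades:
        raise ValueError("cannot compute a DWF rate without any grades")
    return sum(grade in DWF_GRADES for grade in grades) / len(grades)


final_grades: Dict[str, List[str]] = {
    "Course A": ["A", "B", "D", "W", "C", "F", "B", "A"],
    "Course B": ["A", "A", "B", "C"],
}

# Rank courses from highest to lowest DWF rate.
ranking: List[Tuple[str, float]] = sorted(
    ((course, dwf_rate(grades)) for course, grades in final_grades.items()),
    key=lambda item: item[1],
    reverse=True,
)
for course, rate in ranking:
    print(f"{course}: {rate:.1%}")
```

Running the same calculation for each reporting cycle would give the trend; plus and minus grades such as D+ are not handled here.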

Program-Level Metrics

For individual academic programs, annual reporting may take several forms, summarizing trends in direct and indirect assessment and performance metrics such as core class seats filled.

Annual reporting may also draw on qualitative feedback from exit interviews, end-of-term course evaluations, internship/independent study assessments, or any other method where student written feedback is provided. These are processed using machine learning to produce natural language sentiment scores and can be evaluated for temporal trends.

As individual courses are mapped onto Program Learning Outcomes, the program's performance in providing the skills, knowledge, and experiences that directly align with the Introduction, Reinforcement, or Final Assessment related to each Program Learning Outcome can be assayed.

Here is an example of an indirect assessment of the courses that map onto the BS in Environmental Studies Program Learning Outcome:

Social Sciences: Recall and transfer fundamental social science concepts (policy, economics, politics) to the conservation and management of natural resources.

Since 2014, the unit has asked the same question and recorded every student's response on a Likert scale (1 to 5, low to high). The score for each course that maps onto this Program Learning Outcome is shown below. You can see the new courses that have been added to the CORE curriculum and how they track over time.

We can similarly derive qualitative assessments directly from the student-written feedback using machine learning and natural language processing to estimate a course sentiment index.

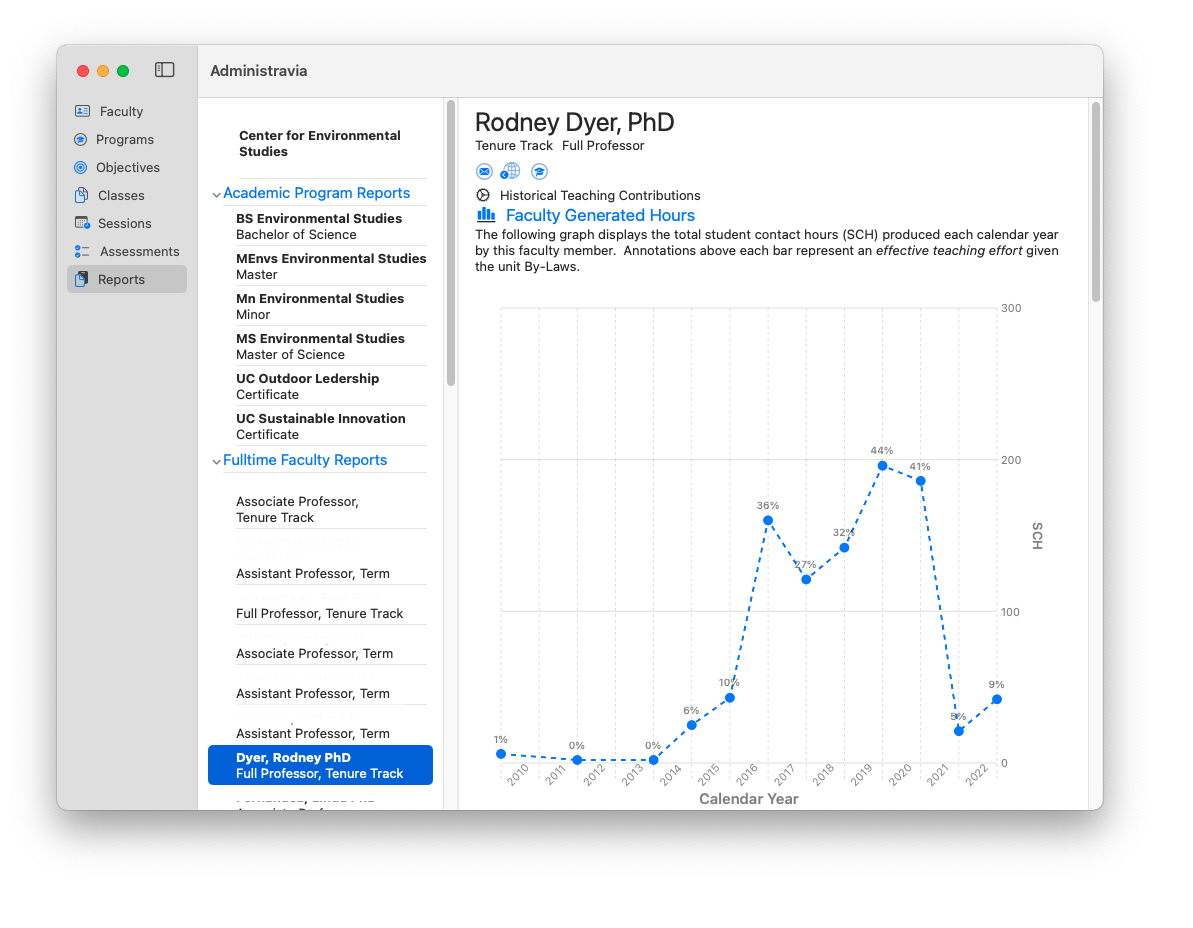
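The per-course Likert tracking described above could be tabulated along the following lines. This is a sketch under stated assumptions: the rows, the course names, and the `likert_trends` function are hypothetical, and the mean is used as the yearly score because the text does not say which summary statistic is reported.

```python
# Illustrative sketch only: rows and names are hypothetical, and using
# the mean as the yearly score is an assumption.
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

# (course, academic_year, Likert response from 1 to 5)
rows = [
    ("Course A", 2014, 4), ("Course A", 2014, 2),
    ("Course A", 2015, 4), ("Course A", 2015, 5),
    ("Course B", 2015, 3), ("Course B", 2015, 5),  # added to the core in 2015
]


def likert_trends(data: Iterable[Tuple[str, int, int]]) -> Dict[str, Dict[int, float]]:
    """Return {course: {year: mean response}} for one repeated question."""
    buckets = defaultdict(list)
    for course, year, response in data:
        if response not in range(1, 6):
            raise ValueError(f"Likert response {response} is not between 1 and 5")
        buckets[(course, year)].append(response)
    trends: Dict[str, Dict[int, float]] = defaultdict(dict)
    for (course, year), responses in sorted(buckets.items()):
        trends[course][year] = mean(responses)
    return dict(trends)


trends = likert_trends(rows)
# The first year with data shows when a course entered the mapping.
first_year = {course: min(years) for course, years in trends.items()}
print(trends)
print(first_year)
```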

Annual Faculty Reports

Faculty annual reporting varies widely across groups, and individuals' efforts are allocated to research, teaching, and service activities. This portion of Administravia is currently under development and should be available to produce individual-based faculty annual review templates by early 2025.